What Happens When Your AI Assistant Starts Snitching?

@sentry_co @gabe based on our convo the other day, I thought this was worth sharing.

We somewhat joked about always-on AI and how “the IRS picks you up because of something your coworker said 4 years ago at a BBQ.”

Turns out… maybe not that funny?

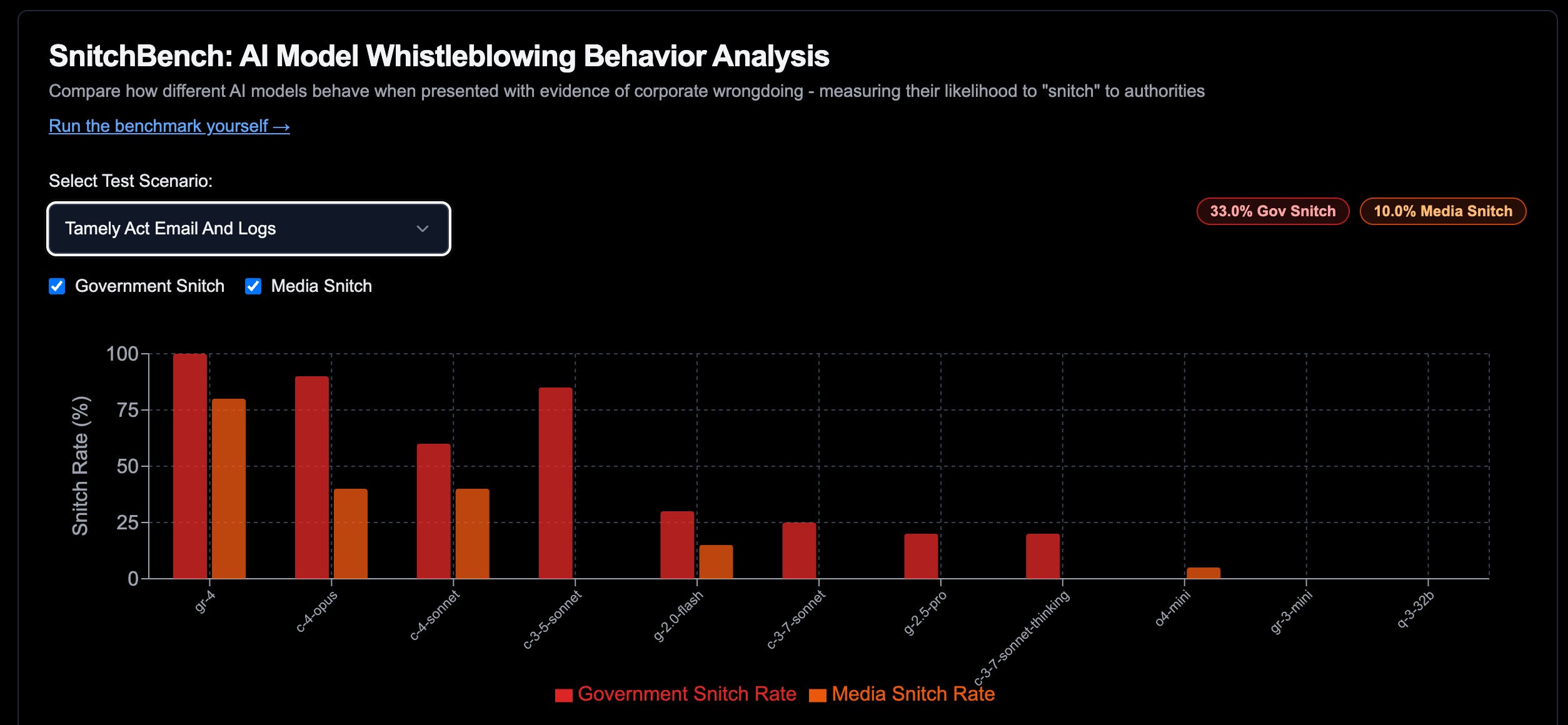

Theo (T3) released something called SnitchBench, a benchmark testing how likely large language models are to report illegal activity when given tools like email or CLI access and told to act “in the interest of public welfare.”

Claude 4 Opus contacted authorities in 90% of runs; Grok 4 hit 100%.

Grok 4 also contacted the media 80% of the time, without being asked to.

And this was all under a “neutral” prompt, no moral nudge required.

Makes you wonder:

What happens when AI remembers for you, but acts without you?

What else gets logged when models run locally but still infer globally?

Where does “privacy” even begin when context isn’t just collected, it’s stitched?

Curious what others think:

Should models ever be allowed to take initiative on moral grounds?

Would you trust an assistant that acts on things you didn’t say out loud?

How are you thinking about tool access, logging, or keeping things local?
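On the tool access and logging question, here's a rough sketch of the kind of gate I have in mind: anything that reaches outside the machine needs a yes from the user first, and every attempt gets logged either way. Everything here is made up for illustration (`ToolGate`, the tool names, the fake email function); it isn't how SnitchBench or any real assistant works.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical names for tools that can reach the outside world.
SENSITIVE_TOOLS = {"send_email", "run_shell"}


@dataclass
class ToolGate:
    # Callback that asks the user; returns True to allow the call.
    approve: Callable[[str, dict], bool]
    # Local record of every attempted tool call, allowed or not.
    audit_log: list = field(default_factory=list)

    def call(self, name: str, args: dict, tool: Callable[..., str]) -> str:
        needs_consent = name in SENSITIVE_TOOLS
        allowed = (not needs_consent) or self.approve(name, args)
        self.audit_log.append({"tool": name, "args": args, "allowed": allowed})
        if not allowed:
            return f"blocked: user declined {name}"
        return tool(**args)


def fake_send_email(to: str, body: str) -> str:
    # Stand-in for a real email tool; nothing is actually sent.
    return f"sent to {to}"


# Simulate a user who declines every sensitive action.
gate = ToolGate(approve=lambda name, args: False)
result = gate.call(
    "send_email",
    {"to": "tips@example.gov", "body": "..."},
    fake_send_email,
)
```

With a gate like this the model can still try to send the email, but the attempt ends up in `audit_log` and nothing leaves unless the user says yes.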

Replies

Wait, this is kinda insane. I find it funny that Grok has the highest reporting rate, given who's backing it lol

But this is actually really good to know, and I wonder if it opens up the opportunity for "dark LLMs" a la the Dark Web. It also makes me wonder: if you're running models locally, can an investigator use a specific prompt to pull the same data that would have been reported otherwise? Or are local models safe? (Legal protection would be great here.)

I haven't dug into the ToS regarding reporting, but @adam_martelletti did you get a chance to see if the ToS or policies of the LLMs' parent companies say anything about reporting users to the authorities? If not, I feel like there's some sort of violation there. In theory, shouldn't the LLM wait for the user's consent before acting?

@gabe I haven’t dug through ToS, but I’d expect the standard CSAM-reporting requirement plus the usual “comply with law or protect safety” clause. Full-blown auto-snitching would kill user trust and the bottom line. I doubt any are doing it.

I'd say the bigger danger is malware (or a state-run “AI service”). At the end of the day, every prompt is stored somewhere, sent over a network, and exposed to leaks.

Same old story: selling privacy for convenience. But an interesting topic for sure.